You might waste time, money, and credibility, and still miss the opportunity to truly harness your data. Here’s the real risk: hiring for a band-aid role instead of building a data orchestra.

Below, in a 3-minute read, I briefly explain why, and how I might help you get off to a better start.

An Officer title implies accountability and responsibility. But that’s a given. What you need is a Chief Data Orchestrator. Why? They have to adeptly conduct, and create brilliant performances from, four areas of experience and skill. Let’s call them pillars:

Analytics, AI, and overall Data Science for revealing and getting ahead of financial, operational, and experiential risk and opportunity. This requires deep technical skills in statistics and modeling, plus an understanding of computational boundaries.

Data Architecture – your data shouldn’t boss you around. Architecture makes your data accessible, structured, and adaptable for many different contexts.

Data Engineering and Technology for putting together the right technology tools, stack, and flow to deliver just enough data, just in time, with as few resources as possible. This requires a considerable amount of coordination with many different parts of your organization, especially your overall technology function!

And the fourth, but most important: Data Governance and Culture, which bridges the distance between the three pillars above and how people use them. It’s not enough to establish rules and procedures for data protection, data use, and data monetization. This is probably the most overlooked role of all, and it is the one the Chief Data Orchestrator must use for the other three pillars to work together and thrive.

Hiring a conductor matters as much as hiring virtuosos. Without orchestration, even brilliant individuals create nothing but noise.

Before hiring a CDO, ask yourself:

What do we already have across these four pillars?

How mature, aligned, and connected are they?

What’s missing?

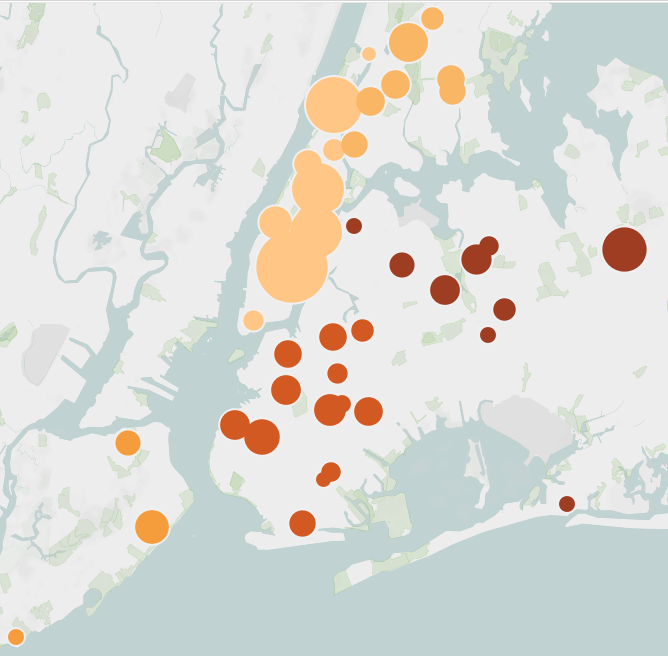

A readiness assessment, one that looks at those three questions, takes only a few weeks to produce insights, and it will prove to be both time-saving and valuable. When you know how well your existing platform and talent are set up for your CDO’s success, your new Chief Data Orchestrator will be positioned to succeed, and grateful for the homework you’ve done.